Ever delivered a beautiful optimization model… only to watch it die in a SharePoint folder?

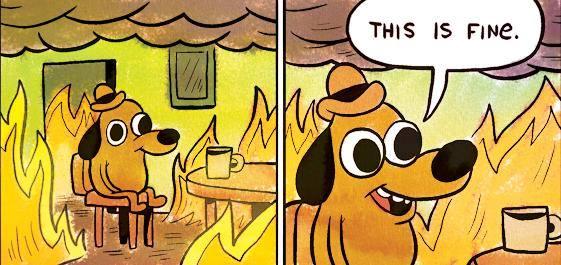

Welcome to the reality of operational decision support: it’s not the math that kills projects. It’s everything after the math.

We’ve built models that sliced millions off cost curves, balanced resource allocations down to the minute, and optimized flows with more elegance than a ballet. Still, some of the best ones never made it to production. Or worse – they did, and no one used them.

Let’s talk about why.

1. The Model Isn’t the Problem. Culture Is.

Optimization models are truth machines. They don’t care about titles, politics, or gut feel. They expose bottlenecks. Silos. Inconsistent KPIs. Sacred cows.

And that’s exactly why they get ignored.

In one project, our model suggested shutting down two expensive regional hubs. The math was flawless. The executive sponsor nodded. Then nothing happened.

Why? Because those hubs were built by the sponsor’s predecessor. Killing them wasn’t just a business move – it was a political firestorm.

It’s not a modeling problem. It’s a courage problem.

Takeaway: Before you fine-tune the solver, ask: who does this model make uncomfortable?

2. No One Owns the Data (So Everyone Blames the Model)

Your model is only as good as the inputs. But in most orgs, ownership of critical data is a mess. Legacy systems, shadow Excel sheets, half-automated ETLs – we’ve seen it all.

In one case, a forecasting model underperformed because of a 5-year-old rounding error in a manually maintained Access database. That error was only spotted when the model flagged “weird behavior.”

The conclusion? “The model’s broken.” (It wasn’t.)

Takeaway: Garbage in, garbage out. Invest as much in trustworthy data flows, and in agreeing who owns each input, as you do in your objective function.
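What could that investment look like in practice? Here is a minimal sketch, not a description of what any client actually ran. It assumes you can recompute a reported total from its underlying line items, and that every input has a named owner. The names `InputCheck`, `check_total` and `failed_checks`, the 0.5% tolerance, and the sample figures are all hypothetical.

```python
from dataclasses import dataclass
from math import fsum


@dataclass
class InputCheck:
    name: str     # which input was checked
    owner: str    # hypothetical: the person or team accountable for this input
    passed: bool
    detail: str


def check_total(name, owner, reported_total, line_items, rel_tolerance=0.005):
    """Compare a reported total against the sum of its line items.

    The check fails when the gap exceeds rel_tolerance (0.5% by default,
    an arbitrary illustrative threshold) of the recomputed total.
    """
    recomputed = fsum(line_items)
    gap = abs(reported_total - recomputed)
    passed = gap <= rel_tolerance * max(abs(recomputed), 1.0)
    detail = (
        f"reported={reported_total:.2f}, "
        f"recomputed={recomputed:.2f}, gap={gap:.2f}"
    )
    return InputCheck(name, owner, passed, detail)


def failed_checks(checks):
    """Return only the checks that failed, so each can be routed to its owner."""
    return [c for c in checks if not c.passed]


# Illustrative data only: one consistent input and one that has drifted.
checks = [
    check_total("regional demand", "planning team", 1000.00, [333.33, 333.33, 333.34]),
    check_total("hub capacity", "operations team", 1100.00, [333.33, 333.33, 333.34]),
]

for check in failed_checks(checks):
    print(f"{check.name}: ask {check.owner} ({check.detail})")
```

The point of the `owner` field is the conversation it forces: when a check fails before the solver runs, the question becomes "who fixes this input?" rather than "is the model broken?"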

3. Everyone Agrees – Until It Gets Real

During pilot phases, everyone’s excited. Then you suggest a change to KPIs. Or challenge someone’s forecast. Or recommend shutting down a process that “has always worked.”

Suddenly, the smiles vanish.

One client’s model showed that maximizing machine uptime – their north star metric – was actually destroying profitability. The optimal solution reduced uptime to allow for larger, more profitable batch runs. Operationally brilliant. Politically lethal.

Takeaway: The real optimization happens in the meeting after the model’s results are presented.

4. Modeling Requires Muscle, but Implementation Requires Stamina

There’s a reason so many optimization projects stall post-PoC.

You need:

- Clear decision rights: Who acts on the model’s output?

- Embedded ownership: Who maintains it?

- Business alignment: Do the incentives support the model’s recommendations?

Without these, even the best model becomes shelfware.

Takeaway: Delivering an optimization model is like handing someone a gym plan. It doesn’t mean they’ll show up and lift.

5. What to Do Differently?

Here’s what separates successful optimization projects from the graveyard crowd:

🧠 Translate, don’t just solve. Every shadow price needs a business story. Every constraint, a sponsor.

🗺 Map the stakeholder landscape. Know where resistance will come from – and why.

🔄 Build feedback loops early. Pilots should mimic reality, not utopia.

🪓 Slay sacred cows gently. Sometimes the best path is a quiet KPI redesign, not a public execution.

🪤 Anticipate objections. “This model is too complex.” “Our context is special.” Prepare responses grounded in empathy, not just math.
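To make "translate, don’t just solve" concrete, here is a toy sketch of turning a shadow price into a sentence a sponsor can act on. Everything in it is an assumption for illustration: the two-product problem, the numbers, and the helper names `solve_2var_lp`, `shadow_price` and `business_story`. The shadow price is estimated by re-solving with one extra unit of a resource, which only matches the true dual value while the same constraints stay binding. A real project would read duals from its solver instead.

```python
from itertools import combinations


def solve_2var_lp(profit, constraints):
    """Maximize profit[0]*x + profit[1]*y s.t. a*x + b*y <= rhs and x, y >= 0.

    Brute-force vertex enumeration. Only sensible for bounded toy problems.
    """
    # Express x >= 0 and y >= 0 as -x <= 0 and -y <= 0.
    rows = list(constraints) + [(-1.0, 0.0, 0.0), (0.0, -1.0, 0.0)]
    best_value, best_point = None, None
    for (a1, b1, r1), (a2, b2, r2) in combinations(rows, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) < 1e-12:
            continue  # parallel boundaries never intersect
        # Cramer's rule for the intersection of the two boundary lines.
        x = (r1 * b2 - r2 * b1) / det
        y = (a1 * r2 - a2 * r1) / det
        if all(a * x + b * y <= r + 1e-9 for a, b, r in rows):
            value = profit[0] * x + profit[1] * y
            if best_value is None or value > best_value:
                best_value, best_point = value, (x, y)
    return best_value, best_point


def shadow_price(profit, constraints, index, step=1.0):
    """Estimate the profit gained per extra unit of one constraint's capacity."""
    base, _ = solve_2var_lp(profit, constraints)
    relaxed = list(constraints)
    a, b, rhs = relaxed[index]
    relaxed[index] = (a, b, rhs + step)
    bumped, _ = solve_2var_lp(profit, relaxed)
    return (bumped - base) / step


def business_story(resource, unit, price):
    """Turn a shadow price into a plain-language statement."""
    if price <= 1e-9:
        return f"More {resource} would not raise profit right now; it is not the bottleneck."
    return (
        f"One more {unit} of {resource} is worth about {price:.0f} in profit, "
        f"so that is the most we should pay for it."
    )


# Hypothetical plant: profit per unit of two products, and two shared resources.
PROFIT = (40.0, 30.0)
CONSTRAINTS = [
    (2.0, 1.0, 100.0),  # machine hours used per unit of each product, and hours available
    (1.0, 1.0, 80.0),   # labor hours used per unit of each product, and hours available
]
RESOURCES = [("machine time", "hour"), ("labor", "hour")]

for i, (resource, unit) in enumerate(RESOURCES):
    print(business_story(resource, unit, shadow_price(PROFIT, CONSTRAINTS, i)))
```

The math ends at a number per constraint. The sentence is what gives each constraint a sponsor: someone who can decide whether an extra hour is worth buying.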

Final Thought

Optimization isn’t about getting the right number. It’s about making better decisions – repeatedly, under pressure, with incomplete data and imperfect politics.

That takes more than a model. It takes organizational maturity, trust, and yes – a bit of courage.

And no, courage can’t be coded in Python.